At the Future Artificial Intelligence Pioneer Forum of the 2024 Zhongguancun Forum Annual Meeting, Tsinghua University and Biotech Technology officially released China's first long-duration, highly consistent, and highly dynamic video model - Vidu on the 27th.

This model uses the team's original architecture U-ViT, which integrates Diffusion and Transformer, and supports one-click generation of high-definition video content up to 16 seconds long and with a resolution of up to 1080P.

On April 27, at the Future Artificial Intelligence Pioneer Forum of the 2024 Zhongguancun Forum Annual Meeting, Tsinghua University jointly launched Vidu. Photo by China News Service reporter Chen Su

According to reports, Vidu can not only simulate the real physical world, but also has rich imagination, multi-lens generation, and high spatio-temporal consistency. Vidu is the world's first large-scale video model to achieve major breakthroughs since the release of Sora. Its performance is fully benchmarked against the top international levels, and it is accelerating iterative improvements.

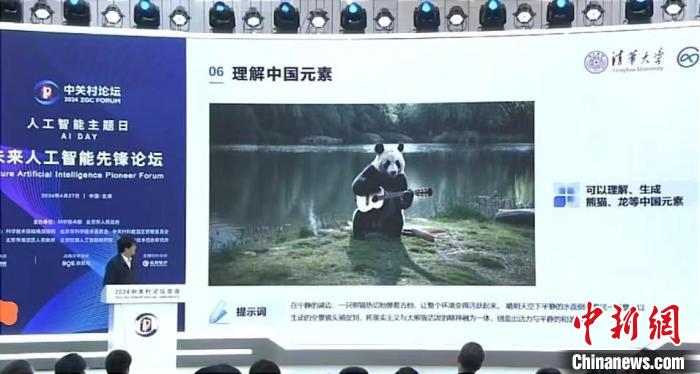

At the forum that day, Zhu Jun, professor at Tsinghua University and chief scientist of Shengshu Technology, said that, consistent with Sora, Vidu can directly generate high-quality videos up to 16 seconds based on the provided text description. In addition to breakthroughs in duration, Vidu has achieved significant improvements in video effects, which are mainly reflected in simulating the real physical world, multi-lens language, high spatio-temporal consistency, and understanding of Chinese elements.

On April 27, at the Future Artificial Intelligence Pioneer Forum of the 2024 Zhongguancun Forum Annual Meeting, Tsinghua University jointly launched Vidu. Vidu has achieved significant improvements in video effects and can generate unique Chinese elements, such as pandas and dragons. Photo by China News Service reporter Chen Su

"It is worth mentioning that Vidu adopts a 'one-step' generation method." Zhu Jun said that, like Sora, the conversion of text to video is direct and continuous, and the underlying algorithm implementation is based on a single model and is completely end-to-end. End-generation, does not involve intermediate frame insertion and other multi-step processing.

Zhu Jun said that Vidu’s rapid breakthrough comes from the team’s long-term accumulation and multiple original achievements in Bayesian machine learning and multi-modal large models. Its core technology, U-ViT architecture, was proposed by the team in September 2022. It is earlier than the DiT architecture adopted by Sora. It is the world's first architecture that integrates Diffusion and Transformer and is completely independently developed by the team.

Since the release of Sora in February this year, based on the in-depth understanding of the U-ViT architecture and long-term accumulated engineering and data experience, the team has further made breakthroughs in key technologies for long video representation and processing in just two months, and developed and launched the Vidu video large model. , significantly improving the coherence and dynamics of the video.

"The name Vidu not only sounds homophonic to 'Vedio', but also contains the meaning of 'We do'." Zhu Jun said that the breakthrough of the model is a multi-dimensional, cross-domain comprehensive process that requires deep integration of technology and industrial applications. He hopes to cooperate with the industry Enterprises and research institutions upstream and downstream of the chain strengthen cooperation to jointly promote the progress of large-scale video models.